Nowcoder Algorithms: 143 High-Frequency Interview Questions from Top Companies (13)


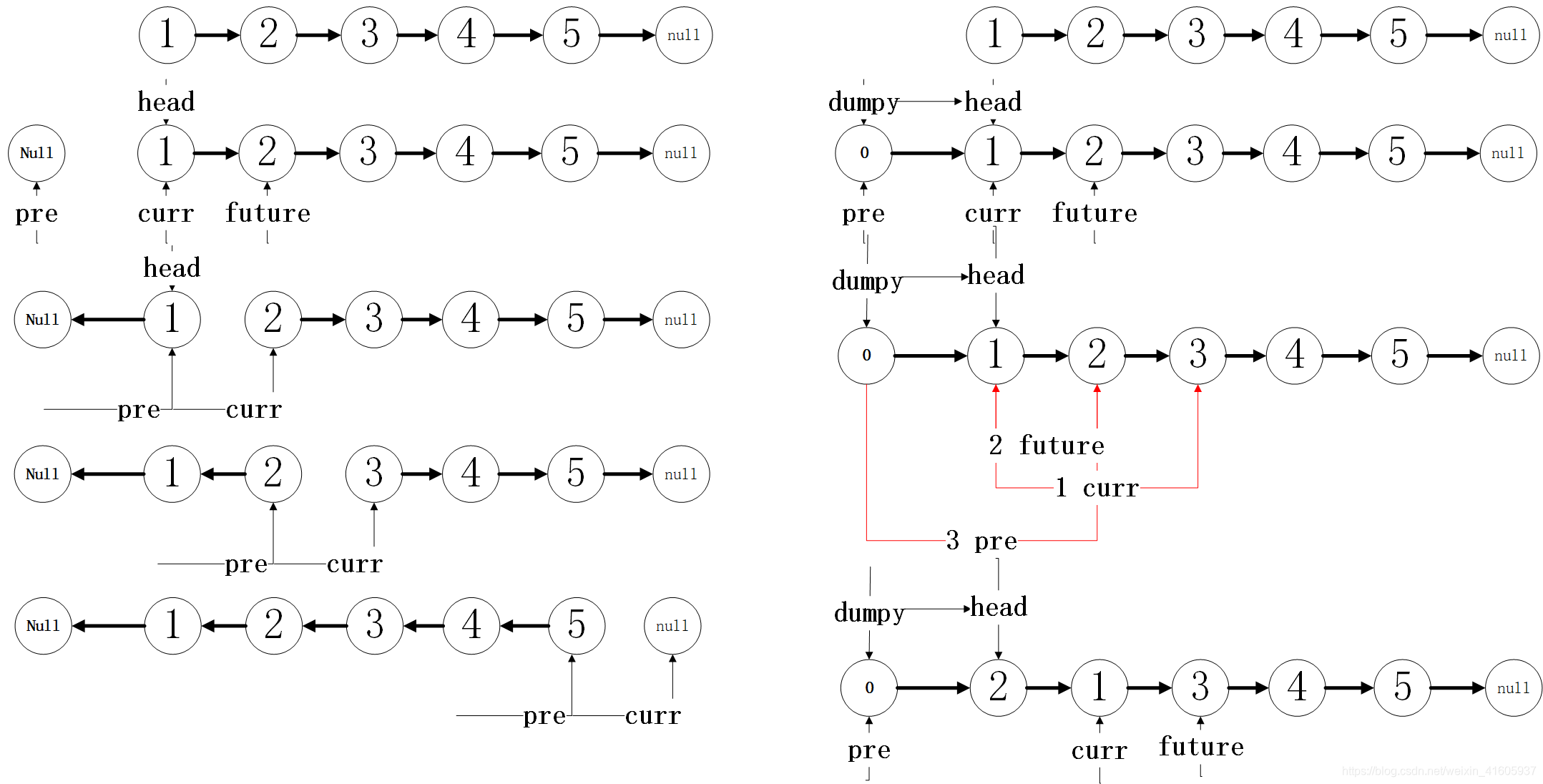

Reverse a linked list

    package 复现代码;

    import org.junit.Test;

    /**
     * @Classname 反转链表II
     * @Description TODO
     * @Date 2020/12/21 12:56
     * @Created by xjl
     */
    public class 反转链表II {

        public class ListNode {
            int val;
            ListNode next;

            public ListNode(int val) {
                this.val = val;
            }
        }

        // Iterative reversal: walk the list once and point each node back at its predecessor.
        public ListNode rever(ListNode head) {
            if (head == null) {
                return null;
            }
            ListNode pre = null;
            ListNode curr = head;
            while (curr != null) {
                ListNode future = curr.next; // remember the rest of the list
                curr.next = pre;             // flip the link
                pre = curr;
                curr = future;
            }
            return pre;
        }

        // Head-insertion reversal: repeatedly move the node after curr to the front,
        // right behind the dummy node. curr (the original head) ends up as the tail.
        public ListNode rever1(ListNode head) {
            if (head == null) {
                return null;
            }
            ListNode dumy = new ListNode(0);
            dumy.next = head;
            ListNode pre = dumy;
            ListNode curr = head;
            while (curr.next != null) {
                ListNode future = curr.next;
                curr.next = future.next;  // unlink future
                future.next = dumy.next;  // future now precedes the current front
                pre.next = future;        // pre is always the dummy node here
            }
            return dumy.next;
        }

        @Test
        public void test() {
            ListNode s1 = new ListNode(1);
            ListNode s2 = new ListNode(2);
            ListNode s3 = new ListNode(3);
            ListNode s4 = new ListNode(4);
            ListNode s5 = new ListNode(5);
            s1.next = s2;
            s2.next = s3;
            s3.next = s4;
            s4.next = s5;
            ListNode res = rever1(s1);
            while (res != null) {
                System.out.print(res.val + " ");
                res = res.next;
            }
        }
    }

Detect whether a linked list has a cycle

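    package 复现代码;

    /**
     * @Classname 判断链表时候有环
     * @Description TODO
     * @Date 2020/12/21 14:38
     * @Created by xjl
     */
    public class 判断链表时候有环 {

        public class ListNode {
            int val;
            ListNode next;

            public ListNode(int val) {
                this.val = val;
            }
        }

        // Fast/slow pointers: fast moves two steps per iteration, slow moves one.
        // If the two pointers ever meet, the list contains a cycle.
        public boolean cycle(ListNode head) {
            if (head == null) {
                return false;
            }
            ListNode fast = head;
            ListNode slow = head;
            while (fast != null && fast.next != null) {
                fast = fast.next.next;
                slow = slow.next;
                if (fast == slow) {
                    return true;
                }
            }
            // fast reached the end of the list, so there is no cycle
            return false;
        }
    }

Find the entry node of a cycle in a linked list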

    package 复现代码;

    /**
     * @Classname 判断环的入口
     * @Description TODO
     * @Date 2020/12/21 14:44
     * @Created by xjl
     */
    public class 判断环的入口 {

        public class ListNode {
            int val;
            ListNode next;

            public ListNode(int val) {
                this.val = val;
            }
        }

        public ListNode test(ListNode head) {
            if (head == null) {
                return null;
            }
            ListNode fast = head;
            ListNode slow = head;
            while (fast != null && fast.next != null) {
                fast = fast.next.next;
                slow = slow.next;
                if (slow == fast) {
                    // The pointers met, so a cycle exists. Restart one pointer from the
                    // head and advance both one step at a time; they meet at the entry.
                    ListNode start = head;
                    ListNode end = slow;
                    while (start != end) {
                        start = start.next;
                        end = end.next;
                    }
                    return start;
                }
            }
            // No cycle
            return null;
        }
    }

Merge two sorted linked lists

    package 复现代码;

    /**
     * @Classname 合并链表
     * @Description TODO
     * @Date 2020/12/21 14:53
     * @Created by xjl
     */
    public class 合并链表 {

        public class ListNode {
            int val;
            ListNode next;

            public ListNode(int val) {
                this.val = val;
            }
        }

        /**
         * @description Both input lists are assumed to be sorted in ascending order.
         * @param: head1
         * @param: head2
         * @date: 2020/12/21 14:58
         * @return: 复现代码.合并链表.ListNode
         * @author: xjl
         */
        public ListNode merge(ListNode head1, ListNode head2) {
            if (head1 == null && head2 != null) {
                return head2;
            }
            if (head1 != null && head2 == null) {
                return head1;
            }
            if (head1 == null && head2 == null) {
                return null;
            }
            ListNode dumpy = new ListNode(0);
            ListNode curr = dumpy;
            ListNode curr1 = head1;
            ListNode curr2 = head2;
            // Always append the smaller of the two current nodes
            while (curr1 != null && curr2 != null) {
                if (curr1.val >= curr2.val) {
                    curr.next = curr2;
                    curr2 = curr2.next;
                } else {
                    curr.next = curr1;
                    curr1 = curr1.next;
                }
                curr = curr.next;
            }
            // At most one list still has nodes; it is already linked, so attach it whole
            curr.next = (curr1 != null) ? curr1 : curr2;
            return dumpy.next;
        }
    }

Remove duplicates from a sorted linked list I

    package 复现代码;

    import org.junit.Test;

    /**
     * @Classname 删除链表的重复元素
     * @Description TODO
     * @Date 2020/12/21 15:06
     * @Created by xjl
     */
    public class 删除链表的重复元素 {

        public class ListNode {
            int val;
            ListNode next;

            public ListNode(int val) {
                this.val = val;
            }
        }

        // Keep one node per value: skip the next node whenever it repeats the current value.
        public ListNode delete(ListNode head) {
            if (head == null) {
                return head;
            }
            ListNode curr = head;
            while (curr.next != null) {
                if (curr.val == curr.next.val) {
                    curr.next = curr.next.next;
                } else {
                    curr = curr.next;
                }
            }
            return head;
        }

        // Same idea as delete(), written with an explicit reference to the next node.
        public ListNode de(ListNode head) {
            if (head == null) {
                return null;
            }
            ListNode curr = head;
            while (curr != null && curr.next != null) {
                ListNode future = curr.next;
                if (future.val == curr.val) {
                    curr.next = future.next;
                } else {
                    curr = curr.next;
                }
            }
            return head;
        }

        @Test
        public void test() {
            ListNode s1 = new ListNode(1);
            ListNode s2 = new ListNode(1);
            ListNode s3 = new ListNode(2);
            ListNode s4 = new ListNode(3);
            ListNode s5 = new ListNode(3);
            s1.next = s2;
            s2.next = s3;
            s3.next = s4;
            s4.next = s5;
            ListNode res = delete(s1);
            while (res != null) {
                System.out.print(res.val + " ");
                res = res.next;
            }
        }
    }

Remove duplicates from a sorted linked list II

    package 名企高频面试题143;

    import org.junit.Test;

    /**
     * @Classname 链表删除所有重复元素
     * @Description TODO
     * @Date 2020/12/18 10:03
     * @Created by xjl
     */
    public class 链表删除所有重复元素 {

        /**
         * @description Removes every value that occurs more than once in a sorted linked list.
         * @param: head
         * @date: 2020/12/18 10:04
         * @return: ListNode
         * @author: xjl
         */
        public ListNode deleteDuplicates(ListNode head) {
            if (head == null) {
                return null;
            }
            ListNode dumy = new ListNode(0);
            dumy.next = head;
            ListNode pre = dumy;
            ListNode curr = head;
            while (curr != null && curr.next != null) {
                ListNode future = curr.next;
                if (future.val != curr.val) {
                    pre = pre.next;
                    curr = curr.next;
                } else {
                    // Skip the whole run of equal values, including curr itself
                    while (future != null && future.val == curr.val) {
                        future = future.next;
                    }
                    pre.next = future;
                    curr = future;
                }
            }
            return dumy.next;
        }

        // Same algorithm with the two branches in the opposite order.
        public ListNode deleteDuplicates2(ListNode head) {
            ListNode dummy = new ListNode(-1);
            dummy.next = head;
            ListNode prev = dummy;
            ListNode curr = head;
            while (curr != null && curr.next != null) {
                if (curr.val == curr.next.val) {
                    ListNode future = curr.next;
                    while (future != null && future.val == curr.val) {
                        future = future.next;
                    }
                    prev.next = future;
                    curr = future;
                } else {
                    prev = prev.next;
                    curr = curr.next;
                }
            }
            return dummy.next;
        }

        @Test
        public void test() {
            ListNode node1 = new ListNode(1);
            ListNode node2 = new ListNode(1);
            ListNode node3 = new ListNode(1);
            ListNode node4 = new ListNode(1);
            ListNode node5 = new ListNode(1);
            ListNode node6 = new ListNode(4);
            ListNode node7 = new ListNode(5);
            ListNode node8 = new ListNode(6);
            node1.next = node2;
            node2.next = node3;
            // node3.next = node4;
            // node4.next = node5;
            // node5.next = node6;
            // node6.next = node7;
            // node7.next = node8;
            ListNode res = deleteDuplicates(node1);
            while (res != null) {
                System.out.print(res.val + " ");
                res = res.next;
            }
        }

        public class ListNode {
            int val;
            ListNode next;

            public ListNode(int val) {
                this.val = val;
            }
        }
    }
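The two deduplication problems are easy to confuse, so here is a minimal standalone sketch that runs both on the same input. It does not use JUnit. The names `DedupDemo`, `Node`, `build`, `toList`, `keepOne` and `dropAll` are hypothetical helpers introduced only for this illustration. The logic is assumed to mirror the `delete` and `deleteDuplicates2` methods above.

```java
import java.util.ArrayList;
import java.util.List;

class DedupDemo {
    // Hypothetical node type, mirroring the ListNode classes used in the post.
    static class Node {
        int val;
        Node next;
        Node(int val) { this.val = val; }
    }

    // Illustrative helper: builds a linked list from the given values.
    static Node build(int... vals) {
        Node dummy = new Node(0);
        Node tail = dummy;
        for (int v : vals) {
            tail.next = new Node(v);
            tail = tail.next;
        }
        return dummy.next;
    }

    // Illustrative helper: copies the list values into a java.util.List.
    static List<Integer> toList(Node head) {
        List<Integer> out = new ArrayList<>();
        for (Node n = head; n != null; n = n.next) {
            out.add(n.val);
        }
        return out;
    }

    // Variant I: keep exactly one node for each value of a sorted list.
    static Node keepOne(Node head) {
        Node curr = head;
        while (curr != null && curr.next != null) {
            if (curr.val == curr.next.val) {
                curr.next = curr.next.next; // drop the repeated node
            } else {
                curr = curr.next;
            }
        }
        return head;
    }

    // Variant II: drop every value that occurs more than once in a sorted list.
    static Node dropAll(Node head) {
        Node dummy = new Node(-1); // dummy head, since the real head may be removed
        dummy.next = head;
        Node prev = dummy;
        Node curr = head;
        while (curr != null && curr.next != null) {
            if (curr.val == curr.next.val) {
                Node future = curr.next;
                while (future != null && future.val == curr.val) {
                    future = future.next; // skip the whole run
                }
                prev.next = future;
                curr = future;
            } else {
                prev = prev.next;
                curr = curr.next;
            }
        }
        return dummy.next;
    }

    public static void main(String[] args) {
        System.out.println(toList(keepOne(build(1, 1, 2, 3, 3)))); // [1, 2, 3]
        System.out.println(toList(dropAll(build(1, 1, 2, 3, 3)))); // [2]
        System.out.println(toList(dropAll(build(1, 1, 1))));       // []
    }
}
```

Variant II needs the dummy head because the first node itself can be part of a duplicated run, as in the last call, where the whole list disappears.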

Reposted from: http://wqch.baihongyu.com/
